DeepSeek is the new talking point in artificial intelligence and generative AI. Touted as remarkably efficient (and, to some, over-hyped), the model has taken the world by storm, reportedly requiring far less computing power than its rivals. A serious threat to ChatGPT, DeepSeek has outgrown its origins as a side project of the Chinese hedge fund High-Flyer.

What really fanned the flames of the DeepSeek hype was its reported training cost of approximately $6M, which some skeptics dismiss as a figure that exists only on paper. Even so, that number boosted its popularity, especially when compared with other AI models such as ChatGPT and Claude 3.5 Sonnet. For example, training a large model such as GPT-3 is estimated to have cost $2-4M. That might sound cheaper than DeepSeek, but it is not the whole story.

According to some sources, training its successor, GPT-4, cost an estimated $41 million to $78 million.

Further evidence of the steep cost came from Sam Altman, CEO of OpenAI, who has said that training the models behind ChatGPT cost more than $100 million. Claude 3.5 Sonnet, by comparison, reportedly cost tens of millions of dollars to train.

Computing is the broader category, covering processes such as performing calculations and manipulating data on a computer. Training an AI model is a subset of computing: it means teaching a machine learning model to learn from data so that it can make predictions.

Keeping this distinction in mind gives a clearer picture when comparing the computing and training costs of different AI models.

Larger, more complex models, such as GPT-3 and Gemini Ultra, incur substantially higher costs because of their massive computing requirements. Smaller, focused AI models are comparatively cheap, but they struggle with complex requests. In short, the more parameters a model has, the more it costs to compute and train, and more training hours push costs higher still. The large datasets needed to train such models add further expense for collecting, cleaning, and storing the data, typically in the cloud.
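To make the link between parameters, training data, and cost concrete, here is a rough estimator. It uses a widely cited rule of thumb for dense transformer models, training compute ≈ 6 × N × D floating-point operations (N = parameters, D = training tokens). The GPU throughput, utilization, and hourly price below are hypothetical placeholders, not figures for any real hardware or cloud provider, and the function name is illustrative.

```python
def estimate_training_cost(params, tokens, peak_flops_per_s, utilization, usd_per_gpu_hour):
    """Rough training-cost estimate from the ~6 * N * D FLOPs rule of thumb.

    All hardware and pricing inputs are assumptions supplied by the caller.
    Returns (gpu_hours, usd).
    """
    total_flops = 6 * params * tokens  # forward + backward passes, dense-transformer approximation
    sustained_flops_per_s = peak_flops_per_s * utilization  # real jobs rarely run at peak
    gpu_hours = total_flops / sustained_flops_per_s / 3600
    return gpu_hours, gpu_hours * usd_per_gpu_hour


# Hypothetical run: 7B parameters, 1T training tokens, GPUs assumed to peak at
# 300 TFLOP/s, 40% utilization, $2 per GPU-hour.
gpu_hours, usd = estimate_training_cost(7e9, 1e12, 300e12, 0.40, 2.0)
print(f"~{gpu_hours:,.0f} GPU-hours, ~${usd:,.0f}")
```

Because the estimate is linear in both N and D, doubling either the parameter count or the number of training tokens doubles the estimated bill, which is the "more parameters, more cost" point above expressed in numbers.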

The cost of computing for AI is no longer just an IT concern; it has reached the C-suite. Generative AI, the key driver, has an insatiable appetite for computing resources. If left unchecked, an explosion of unexpected costs could easily jeopardize companies' ability to innovate.

GenAI uses generative models that learn patterns and structures from huge datasets and then produce new data in response to natural-language inputs. These models are increasingly sought after for building virtual assistants and video games, and even for training other AI models.

Large language models (LLMs) are a type of generative AI.

Companies attempting to scale AI are already feeling the heat of surging costs, and budgets are skyrocketing in the pursuit of competitive advantage. Some are making a strategic bet to pull back the throttle, at the risk of losing the AI race.

For example, some figures suggest that, on average, 15% of AI projects have been put on hold.

Therefore, to calculate the true business impact, owners have to look beyond the obvious and understand the real economics of AI models.

Experts have put forward a few suggestions for enterprises that want to use AI models but are hesitant to exceed their budgets. For instance, shrinking LLMs and making them faster can reduce the cost of running them.

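Quantization is another example. By storing a model's weights at lower numerical precision (for example, 8-bit integers instead of 32-bit floating-point numbers), it reduces the model's memory footprint and speeds up inference, cutting hardware costs. This makes models more affordable to deploy and use.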
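A minimal sketch of the idea, using symmetric per-tensor int8 quantization (one common scheme among several): each float weight is divided by a single scale factor and rounded to an integer in [-127, 127]. The weight values are made up for illustration; production toolkits use more elaborate schemes such as per-channel scales and calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: one float scale, integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original float weights."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.05, 0.0, 0.64, -0.33]  # illustrative float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")

# Memory: 4 bytes per float32 weight vs 1 byte per int8 weight (plus one 4-byte scale).
fp32_bytes = 4 * len(weights)
int8_bytes = len(weights) + 4
```

At the scale of a real model the saving is what matters: a 7-billion-parameter model needs about 28 GB for its weights in float32 but about 7 GB in int8, at the price of a rounding error of at most half the scale per weight.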

Recently, OpenAI CEO Sam Altman stated that the cost of using a given level of AI falls roughly tenfold every 12 months. Seen from a distance, the costs of these AI tools still look far from economical; narrowing the lens to an actual cost evaluation, however, brings some clarity.
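To see what a tenfold annual decline implies, assume it holds steadily: the cost after t months is c₀ / 10^(t/12), which works out to roughly a 17.5% drop each month. The starting price below is hypothetical, and the sketch simply assumes the trend continues, which is far from guaranteed.

```python
def projected_cost(current_cost, months, annual_factor=10.0):
    """Cost after `months`, assuming it falls by `annual_factor` every 12 months."""
    return current_cost / annual_factor ** (months / 12)


# Hypothetical starting price: $10 per million tokens.
for months in (0, 6, 12, 24):
    print(f"after {months:2d} months: ${projected_cost(10.0, months):.3f}")
```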

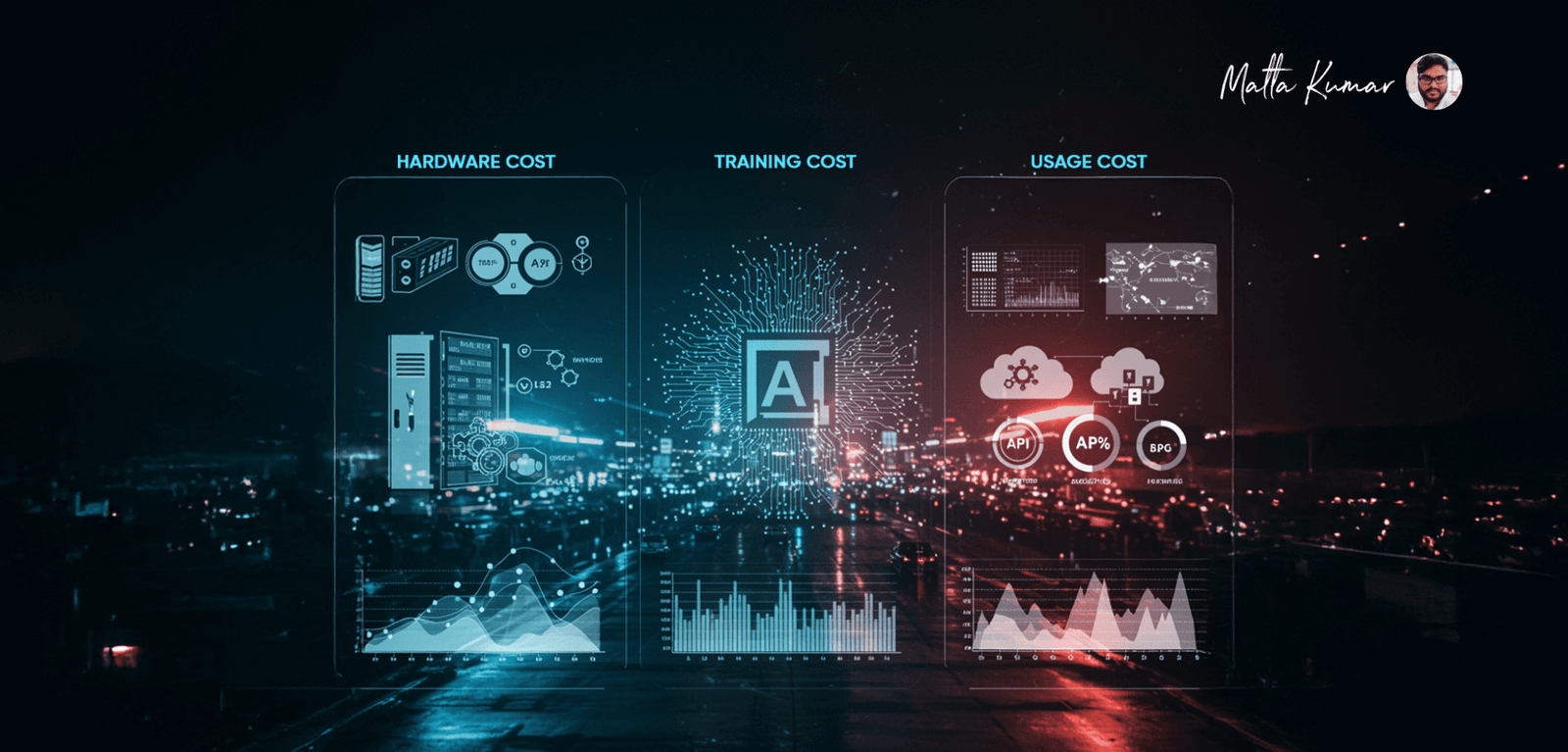

On that note, let’s consider the cost breakdown of AI models:

In reality, AI models are not as cheap as many might assume from the headlines. What is actually getting more affordable is usage. The costs of infrastructure, computing, and training are likely to stay in roughly the same range, with minor variations here and there.

With that in mind, let's look more closely at the factors that influence LLM training costs.

AI agents are evolving and becoming more sophisticated. Deploying the right AI model requires a blend of cutting-edge technology, domain expertise, and a deep understanding of customers' needs. Unfortunately, the more complex the requirements, the more it costs to train the AI tools.

Here are the major factors that drive up the cost of computing, training, and deploying AI models:

AI models depend heavily on the quantity and quality of massive datasets. This is where a major cost lies hidden, because high-quality, diverse datasets are not cheap. As models grow more complex, they need much larger datasets, and costs climb steeply as a result.

Simple, basic AI models are cheaper to train than advanced deep learning models. Neural networks and reinforcement learning demand more computational power and time, so higher development and infrastructure costs should be expected.

Powerful hardware such as GPUs and TPUs is essential for training AI models. Cloud computing admittedly reduces some costs, but expenses can still accumulate and surge over time.

The success of an AI model depends heavily on a skilled workforce. However, AI specialists, data scientists, and engineers are expensive. Many businesses see outsourcing as a cost-effective alternative to building an in-house team.

To meet expectations, AI models go through several training cycles that optimise their performance. These cycles are unavoidable, and the longer each one runs, the more it costs. As a result, ongoing training expenses become a monthly or yearly fixture in the company's budget.

Choosing between cloud-based services and on-premise infrastructure impacts costs. Cloud platforms offer scalability but require ongoing fees, while on-premise setups involve a high initial investment but can be cost-effective for large-scale projects.
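The cloud versus on-premise trade-off can be framed as a simple break-even calculation: on-premise hardware pays for itself once the cumulative monthly savings over cloud cover the upfront investment. All figures below are hypothetical, and the sketch ignores real-world factors such as hardware depreciation, financing, utilization, and staff costs.

```python
import math


def breakeven_months(upfront_cost, onprem_monthly_cost, cloud_monthly_cost):
    """Months until cumulative on-premise spend falls below cumulative cloud spend.

    Returns math.inf if on-premise is never cheaper month to month.
    """
    monthly_saving = cloud_monthly_cost - onprem_monthly_cost
    if monthly_saving <= 0:
        return math.inf
    return upfront_cost / monthly_saving


# Hypothetical figures: $400k of hardware plus $10k/month to run it on-premise,
# versus $30k/month for equivalent cloud capacity.
months = breakeven_months(400_000, 10_000, 30_000)
print(f"Break-even after about {months:.0f} months")
```

A project expected to run well past the break-even point favors on-premise; a short or uncertain one favors the cloud's pay-as-you-go model.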

Most AI models are customized to fit specific business needs, and customization is undoubtedly costly. It requires additional resources, development time, and expertise, adding to the overall expense.

With GenAI in the picture, AI models have become more complex, creating unprecedented challenges for businesses. Balancing performance with cost efficiency is a real struggle. Enterprises face two options: abandon the AI race, or find ways to keep the staggering costs in check. Experts recommend a few basic optimisation methods that can bring training and deployment costs down without reducing the model's effectiveness.

Why train AI models from scratch when pre-trained models are available for transfer learning? With transfer learning, companies deploy existing models fine-tuned for specific tasks, cutting both training time and computational costs, often without compromising accuracy.
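The sketch below illustrates the core idea of transfer learning under heavy simplification, in plain Python: a "pretrained" feature extractor is frozen and only a small logistic-regression head is trained on the new task. The backbone weights here are random stand-ins (an assumption; a real backbone would come from a model trained on a large dataset), and the toy task is invented for illustration.

```python
import math
import random

random.seed(0)
IN_DIM, FEAT_DIM = 8, 16

# Stand-in for a pretrained backbone. Assumption: random weights here; in practice
# they would come from a model trained on a large upstream dataset. Never updated.
BACKBONE = [[random.gauss(0.0, 1.0) for _ in range(IN_DIM)] for _ in range(FEAT_DIM)]


def extract_features(x):
    """Frozen feature extractor: ReLU(W x)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]


def sigmoid(z):
    z = max(-50.0, min(50.0, z))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(dataset, epochs=100, lr=0.01):
    """Train only a logistic-regression head on top of the frozen backbone."""
    cached = [(extract_features(x), y) for x, y in dataset]  # frozen, so compute once
    weights, bias = [0.0] * FEAT_DIM, 0.0
    for _ in range(epochs):
        for feats, y in cached:
            p = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            grad = p - y  # derivative of the log-loss with respect to the logit
            weights = [w - lr * grad * f for w, f in zip(weights, feats)]
            bias -= lr * grad
    return weights, bias


def accuracy(dataset, weights, bias):
    hits = 0
    for x, y in dataset:
        p = sigmoid(sum(w * f for w, f in zip(weights, extract_features(x))) + bias)
        hits += int((p >= 0.5) == (y == 1))
    return hits / len(dataset)


# Hypothetical downstream task: label is 1 when the first input value is positive.
data = []
for _ in range(200):
    x = [random.gauss(0.0, 1.0) for _ in range(IN_DIM)]
    data.append((x, 1 if x[0] > 0 else 0))

head_w, head_b = fine_tune_head(data)
trainable = len(head_w) + 1
frozen = FEAT_DIM * IN_DIM
print(f"trainable: {trainable}, frozen: {frozen}, "
      f"train accuracy: {accuracy(data, head_w, head_b):.2f}")
```

Because the backbone never changes, its features are computed once and reused every epoch, and only 17 of the 145 parameters are trained. Real fine-tuning jobs rely on the same principle, updating a small fraction of the weights, to cut training time and compute.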

AI models cannot function without high-quality data, but processing massive datasets is hard on the budget. Thankfully, data augmentation offers some relief: it expands datasets without additional collection costs. In addition, smart sampling techniques can train models on a representative subset of the data, maintaining high performance while reducing storage and processing requirements.
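As one example of smart sampling, the sketch below draws a stratified subset: it samples the same fraction from every class so that the subset keeps the original label balance, including rare classes. The dataset and labels are invented for illustration, and data augmentation is not shown.

```python
import random
from collections import defaultdict


def stratified_sample(records, label_of, fraction, seed=0):
    """Return about `fraction` of `records`, preserving each label's share."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for record in records:
        groups[label_of(record)].append(record)
    subset = []
    for group in groups.values():
        k = max(1, round(len(group) * fraction))  # keep at least one example per class
        subset.extend(rng.sample(group, k))
    rng.shuffle(subset)
    return subset


# Hypothetical imbalanced dataset: 900 "neg" and 100 "pos" examples.
data = [("neg", i) for i in range(900)] + [("pos", i) for i in range(100)]
subset = stratified_sample(data, label_of=lambda r: r[0], fraction=0.1)
positives = sum(1 for label, _ in subset if label == "pos")
print(len(subset), positives)
```

A plain random 10% sample could easily under-represent the rare class; stratifying keeps it at exactly its original share here.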

Hybrid cloud solutions and reserved cloud resources are increasingly used to balance on-premise and cloud infrastructure, optimising both cost and scalability.

Model compression is one of the most effective ways to cut costs while keeping the accuracy of AI models largely intact. Techniques such as pruning, quantization, and knowledge distillation streamline AI models and lower their computational and storage requirements.
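Of the compression techniques listed, magnitude pruning is the simplest to sketch: the weights with the smallest absolute values are set to zero. This is unstructured pruning on a single made-up layer. In practice the zeros only save memory and compute when stored in a sparse format or run on kernels and hardware that exploit sparsity, and pruned models are usually fine-tuned afterwards to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value; the first n_prune are the least important.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


layer = [0.91, -0.02, 0.45, 0.003, -0.77, 0.08, -0.31, 0.015]  # illustrative weights
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)  # [0.91, 0.0, 0.45, 0.0, -0.77, 0.0, -0.31, 0.0]
```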

AutoML (automated machine learning) tools automate model selection, hyperparameter tuning, and deployment, requiring minimal manual intervention. Companies can therefore save on the cost of hiring expensive in-house experts without compromising the performance of their AI tools.
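At the heart of many AutoML tools is an automated search over hyperparameters. The sketch below shows the simplest version, random search; real tools typically add smarter strategies (such as Bayesian optimization), early stopping, and architecture search. The objective function is a hypothetical stand-in for "train a model and return its validation score".

```python
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Sample configurations from `space` at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = objective(config)  # in practice: train, then evaluate on validation data
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_objective(cfg):
    """Hypothetical score: peaks at learning_rate=0.01 and 4 layers."""
    return -(cfg["learning_rate"] - 0.01) ** 2 - 0.001 * abs(cfg["layers"] - 4)


space = {"learning_rate": [0.1, 0.03, 0.01, 0.003], "layers": [2, 4, 8]}
best, score = random_search(toy_objective, space, n_trials=30)
print(best, score)
```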

Choosing the right algorithm for each task can improve efficiency. Lighter architectures, such as smaller transformer-based models optimised for specific use cases, reduce the need for high-power computing resources.
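One way to see why lighter architectures are cheaper is to count parameters. A standard approximation for a decoder-only transformer gives about 12 × d_model² parameters per layer (4 × d_model² for the attention projections and 8 × d_model² for a feed-forward block four times wider), plus the token embeddings. The two configurations below are hypothetical, and biases and normalization layers are ignored.

```python
def transformer_params(n_layers, d_model, vocab_size, ff_mult=4):
    """Approximate parameter count of a decoder-only transformer.

    Per layer: 4 * d^2 for the Q, K, V and output projections, plus
    2 * ff_mult * d^2 for the feed-forward block. Biases and layer norms are
    ignored; embeddings add vocab_size * d (assumed tied with the output layer).
    """
    per_layer = 4 * d_model**2 + 2 * ff_mult * d_model**2
    return n_layers * per_layer + vocab_size * d_model


small = transformer_params(n_layers=12, d_model=768, vocab_size=32_000)
large = transformer_params(n_layers=48, d_model=1600, vocab_size=32_000)
print(f"small: {small:,}  large: {large:,}  ratio: {large / small:.1f}x")
```

Since training compute for a fixed number of tokens grows roughly in proportion to the parameter count, the smaller configuration would need on the order of fourteen times less compute to train on the same data.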

Many businesses use incremental learning or adaptive retraining, in which only the relevant parts of a model are updated with new data. This avoids unnecessary computational costs while keeping models up to date.
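Incremental updates are easiest to see on a model whose state can be summarised by running statistics. The sketch below fits a straight line y = a·x + b and folds in each new batch without revisiting old data, yet ends up with exactly the fit that retraining on all the data would give. It illustrates the principle only; incremental updating of neural networks works differently and generally does not match a full retrain exactly.

```python
from dataclasses import dataclass


@dataclass
class IncrementalLinearFit:
    """Least-squares line y = a*x + b, updated batch by batch from running sums."""
    n: int = 0
    sx: float = 0.0
    sy: float = 0.0
    sxx: float = 0.0
    sxy: float = 0.0

    def update(self, xs, ys):
        """Fold in a new batch; previously seen data is never touched again."""
        for x, y in zip(xs, ys):
            self.n += 1
            self.sx += x
            self.sy += y
            self.sxx += x * x
            self.sxy += x * y

    def coefficients(self):
        denom = self.n * self.sxx - self.sx ** 2
        if denom == 0:
            raise ValueError("need at least two distinct x values")
        a = (self.n * self.sxy - self.sx * self.sy) / denom
        b = (self.sy - a * self.sx) / self.n
        return a, b


model = IncrementalLinearFit()
model.update([1, 2, 3], [3, 5, 7])  # initial data, consistent with y = 2x + 1
model.update([4, 5], [9, 11])       # new data arrives later
print(model.coefficients())  # (2.0, 1.0)
```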

A host of smaller AI models, such as Microsoft's open-source Phi-3.5, are comparatively cheap to modify and deploy. The upshot is that the widespread anxiety about expensive foundation models may be misplaced.

The promises are truly inspiring, but a nagging question remains: will the downward price trajectory be sustainable?

Declining AI training costs present opportunities, but they are not a one-size-fits-all solution for business transformation. Nor do today's cheaper AI models eliminate other challenges: high operational costs, ethical concerns, regulatory compliance, and investment in infrastructure and skilled talent.

Because LLM-based tools have been trained on massive datasets of text from the internet, books, articles, and other online content, they have a strong grasp of semantic context. Beyond content ideation and creation, these tools can fill other roles, including search engine optimisation. Using natural language processing, they can understand and produce content optimised for the latest search algorithms and user intent. However, SEO is expected to shift from a traditional keyword-centric approach toward more sophisticated, content-driven processes, as generative search engines become the primary platforms where users look for answers. Beyond basic organic search optimisation, these tools can also help with technical aspects of SEO, including schema markup, link building (including internal linking), and improving the user experience of websites.
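Schema markup is usually embedded as JSON-LD using the schema.org vocabulary. The sketch below builds a minimal `Article` snippet of the kind an LLM-assisted workflow might draft; the headline, author, date, and description are hypothetical, and any generated markup should still be checked by a person and validated with a structured-data testing tool before publishing.

```python
import json


def article_jsonld(headline, author_name, date_published, description):
    """Build schema.org Article markup wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "description": description,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Hypothetical page details.
snippet = article_jsonld(
    headline="The Real Economics of AI Models",
    author_name="Jane Doe",
    date_published="2025-02-10",
    description="Why AI training costs stay high even as usage gets cheaper.",
)
print(snippet)
```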

A few examples of how they can assist with SEO and digital marketing:

The practical applications of LLMs in SEO in a nutshell

Despite their benefits, these tools are not perfect, but they can be used strategically to become more helpful assistants. Here are a few challenges and ways to turn them to your advantage.

It is important to understand that these tools depend entirely on the data fed into them. They cannot truly replace human intelligence when it comes to understanding context or delivering knowledge on par with humans. Relying on them blindly, especially for SEO and other digital marketing activities, can therefore be a significant pitfall. A human in the loop is needed to fact-check the output and add nuanced insight, improving accuracy, relevance, and contextual appropriateness. This is something the tools cannot replicate.

What they can really do is help your team understand and adapt to changes in SEO algorithms by analysing search trends and user behavior. The rest is up to your team to optimise and benefit from these low-cost LLM tools.

It is genuinely difficult to predict how AI will shape the future of business. The tension between declining training costs and rising hidden expenses is likely to continue. Yet companies can still sail in the same waters by licensing AI interfaces, as mentioned before. In that case, they pay for the tokens they use, and per-token prices are falling, according to the statistics. As a result, adoption and usage are likely to grow.
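Because licensed AI interfaces are typically billed per token, often with input and output tokens priced separately, a workload's monthly bill is simple to estimate. The request volume, token counts, and prices below are hypothetical, not any provider's actual rates.

```python
def monthly_api_cost(requests_per_day, input_tokens, output_tokens,
                     usd_per_million_in, usd_per_million_out, days=30):
    """Estimate monthly spend for a pay-per-token API."""
    per_request = (input_tokens * usd_per_million_in
                   + output_tokens * usd_per_million_out) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical workload: 10,000 requests/day, 1,000 input and 300 output tokens each,
# at $0.50 per million input tokens and $1.50 per million output tokens.
cost = monthly_api_cost(10_000, 1_000, 300, 0.50, 1.50)
print(f"${cost:,.2f} per month")
```

If per-token prices fell tenfold, the same workload would cost a tenth as much, which is why falling token prices tend to encourage more usage.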

However, big tech companies in the West are set to develop their own AI tools, pooling more funds in expectation of substantial returns. For smaller businesses, adopting earlier AI models and training them at a lower cost could be a fair solution.

Regardless, skeptics will continue to question whether GenAI improves a business's bottom line, and whether it is worth the investment if high costs limit its use to chatbots and text summarisation.

That said, companies are likely to take the high road and adjust their operations to stay on track in the AI race.